Data-driven development: Managing and automatically processing huge amounts of data for autonomous driving

Jul 21, 2021 by Matthias Zobel

Autonomous Driving (AD) functions and Advanced Driver Assistance Systems (ADAS) perceive traffic and the environment using cameras, lidar (Light Detection and Ranging), and radar sensors. Any defects or disturbances to the sensors could lead to unintended system behavior. For example, dirt from the road or snow and ice in wintry conditions could block a sensor or limit its ability to perform correctly. Such a blockage could prevent the sensor from detecting relevant objects, or could cause the blockage itself to be misidentified as objects that are not actually present.

This is why one of the requirements for such sensors is a monitoring mechanism that detects any loss in perception performance caused by occlusion: the sensors monitor themselves to detect any disturbances that could impair their ability to sense and interpret the environment accurately. In severe cases of lost perception, countermeasures such as automatic cleaning or disabling system functionality can be initiated.

To prove and validate that the blockage monitoring of a sensor works as expected, the detection’s output needs to be compared to a known reference (called ‘ground truth’). But how do you obtain this ground truth? One way is to watch the sensor with an external camera during real operation, record both camera and sensor data, and then analyze the performance of the sensor’s blockage detection by comparing it to the video footage of the sensor.

Take, for example, a lidar sensor mounted at the front of a car. The lidar works by emitting and receiving laser beams through a front surface (which is internally monitored for defects and blockages). To obtain the ground truth for this lidar, engineers mount an additional camera to record videos of the sensor’s surface. These videos are processed automatically by computer vision algorithms to detect and mark areas that have become dirty or occluded over time. Finally, these identified regions are collected, stored, and used to validate the lidar’s own blockage detection mechanism.
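
To make the comparison step concrete, here is a minimal sketch of how a sensor's self-reported blockage could be scored against the camera-derived ground truth. It assumes, purely for illustration, that both are available as equally sized grids of cells over the sensor surface, where `True` means "blocked". The names `Mask`, `BlockageScore`, and `score_blockage` are hypothetical and not part of Robotic Drive; the actual validation may use different representations and metrics.

```python
from dataclasses import dataclass
from typing import Sequence

# Assumption: a mask is a 2D grid over the sensor surface; True = cell is blocked.
Mask = Sequence[Sequence[bool]]


@dataclass(frozen=True)
class BlockageScore:
    true_positives: int   # blocked in ground truth and reported by the sensor
    false_positives: int  # reported by the sensor but clean in ground truth
    false_negatives: int  # blocked in ground truth but missed by the sensor

    @property
    def precision(self) -> float:
        reported = self.true_positives + self.false_positives
        return self.true_positives / reported if reported else 1.0

    @property
    def recall(self) -> float:
        actual = self.true_positives + self.false_negatives
        return self.true_positives / actual if actual else 1.0

    @property
    def iou(self) -> float:
        # Intersection over union of the two blocked regions.
        union = self.true_positives + self.false_positives + self.false_negatives
        return self.true_positives / union if union else 1.0


def score_blockage(ground_truth: Mask, detected: Mask) -> BlockageScore:
    """Compare the sensor's blockage mask cell by cell with the ground truth."""
    if len(ground_truth) != len(detected) or any(
        len(gt_row) != len(det_row) for gt_row, det_row in zip(ground_truth, detected)
    ):
        raise ValueError("masks must have the same shape")

    tp = fp = fn = 0
    for gt_row, det_row in zip(ground_truth, detected):
        for gt_cell, det_cell in zip(gt_row, det_row):
            if gt_cell and det_cell:
                tp += 1
            elif det_cell:
                fp += 1
            elif gt_cell:
                fn += 1
    return BlockageScore(tp, fp, fn)
```

A low recall would indicate that the sensor misses real blockages, while a low precision would indicate that it reports blockages the camera does not confirm.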

To cover as many scenarios as possible, a huge amount of video data, from hundreds to thousands of driving hours, needs to be processed automatically and managed efficiently. Processing this data requires compute power, large storage capacity, and flexibility. Our implemented solution uses Adaptix Solutions Robotic Drive and leverages AWS cloud services for elastic compute and storage. It consists of a subset of Adaptix Solutions Robotic Drive software components, which run in the AWS cloud on top of AWS managed services.

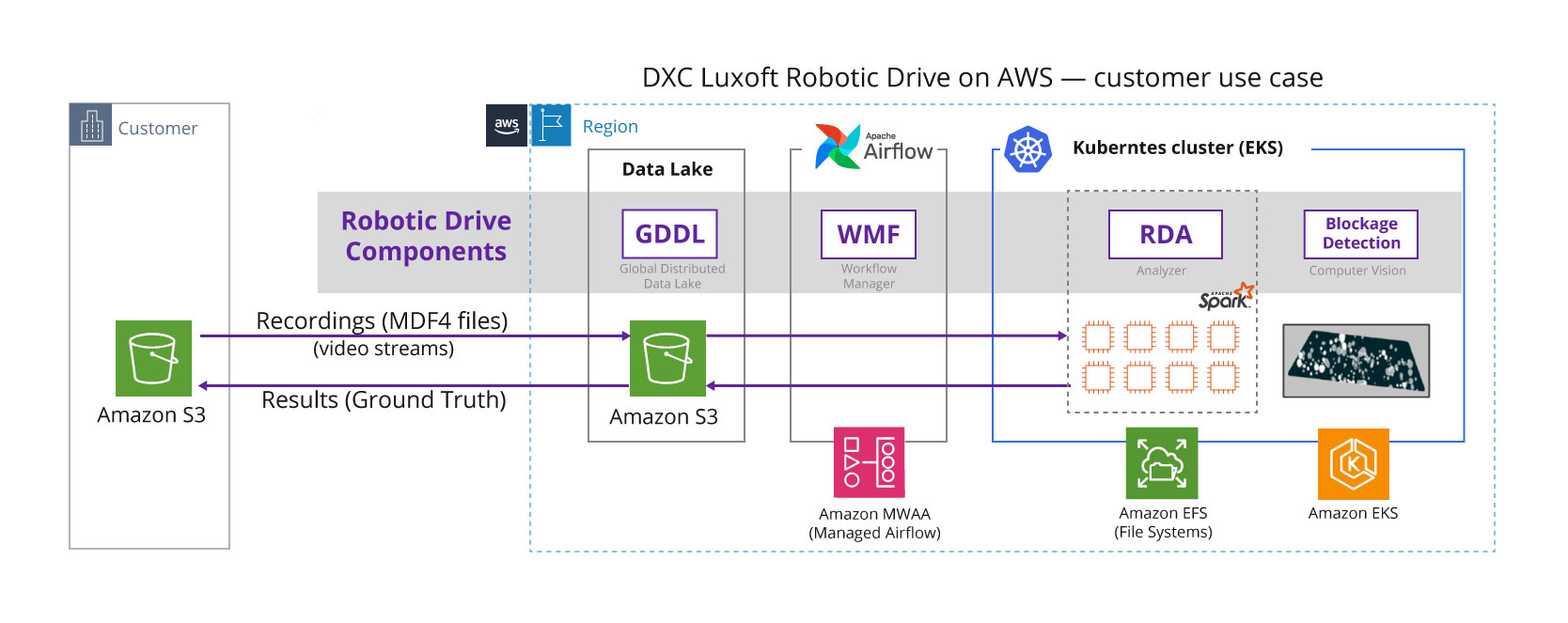

The customer provides the video recording files in MDF4 format (the automotive standard for measurement data) in a customer-owned S3 bucket, which is then synchronized to an Adaptix Solutions-owned S3 bucket. New files automatically trigger a job in the RD Workflow Manager (WFM) component. WFM itself leverages the Amazon Managed Workflows for Apache Airflow (MWAA) cloud service to reduce complexity.
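
As an illustration of the "new file triggers a job" step, the sketch below picks newly uploaded recordings out of a standard S3 event notification. How WFM is actually wired to the bucket is not described here, so treat this as one plausible shape only. The function name `new_recordings` and the `.mf4` suffix filter are assumptions made for the example; the event layout (`Records`, `eventName`, `s3.bucket.name`, `s3.object.key` with URL-encoded keys) follows the S3 notification format.

```python
from typing import List, Tuple
from urllib.parse import unquote_plus

# Assumption: recordings are identified by the usual MDF4 file extension.
MDF4_SUFFIX = ".mf4"


def new_recordings(event: dict) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs for newly created MDF4 files in an S3 event."""
    recordings = []
    for record in event.get("Records", []):
        # Ignore deletions and other non-creation events.
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications (spaces become "+").
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.lower().endswith(MDF4_SUFFIX):
            recordings.append((bucket, key))
    return recordings
```

Each returned pair could then be handed to the workflow as the input of one processing job.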

The workflow automatically initiates processing of the MDF4 files with the RD Analyzer (RDA) component. RDA runs in an Amazon EKS Kubernetes cluster and can process many files in parallel. The size of the Kubernetes cluster is automatically increased or decreased based on demand, and it uses Amazon EC2 Spot Instances to keep costs low.
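
The scaling behavior can be pictured with a small piece of arithmetic. The sketch below is not how the cluster is actually scaled (that is presumably handled by Kubernetes autoscaling rather than custom code); it only shows the idea of sizing the worker pool to the backlog. The function `desired_workers` and all of its parameters and default values are hypothetical.

```python
import math


def desired_workers(
    pending_files: int,
    files_per_worker: int = 4,   # assumed number of files one worker handles at a time
    min_workers: int = 0,        # scale to zero when nothing is queued
    max_workers: int = 50,       # assumed upper bound to cap cost
) -> int:
    """Return how many workers the current backlog calls for, within limits."""
    if pending_files < 0 or files_per_worker <= 0:
        raise ValueError("pending_files must be >= 0 and files_per_worker > 0")
    needed = math.ceil(pending_files / files_per_worker)
    return max(min_workers, min(max_workers, needed))
```

With these assumed defaults, an empty queue calls for no workers, nine pending files call for three workers, and a very large backlog is capped at the maximum.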

The RDA component extracts the images from the video stream and passes them to a computer vision algorithm developed by Adaptix Solutions, which analyzes the images and detects blockages. The results represent the ground truth information for the video data. They are passed back to the Adaptix Solutions S3 bucket and then automatically to the customer S3 bucket.

The whole process is automated end to end and provides a convenient, on-demand ground truth labeling service: simply upload new video recording files into an S3 bucket and, once processing has finished, automatically receive the related result files in the same S3 bucket. The solution significantly simplifies and speeds up AD workflows.

Our use case is an example of how Robotic Drive software components and services can be optimized together with the AWS cloud to quickly deliver lightweight AD solutions that provide business value, simplicity, and flexible scalability as needed.

The solution uses proven AD software components and eliminates the need to create and manage complex infrastructure. It leverages AWS managed services underneath (where possible) to keep the total cost of ownership low and to make it faster to adapt the solution to new business needs.

Speed is an important business advantage in today’s competitive world. To double down on this advantage, the innovation cycle can be accelerated by leveraging AWS out-of-the-box services and creating all infrastructure and deployments in a fully automated way from code. Are you ready to get ahead?