In brief

- Metrics for developer productivity are desired by executives and procurement functions to demonstrate the return on project investment and assess their organizations

- However, measuring individual developer productivity is a complex challenge, with a gap between executives' need for metrics and developers' resistance to being measured

- Good metrics are objective, easily understood, and designed to encourage desired behaviors. Metrics that are used to determine individual performance or as a basis for rewards can lead to counterproductive behaviors and hinder collaboration

- The Adaptix Solutions CTO Advisory team seeks to find a middle way to satisfy the needs of executives whilst respecting developers and acknowledging the constraints that they work within. This article considers developer productivity measures that enable our developers to shine and shift the conversation away from a focus solely on the day rate

Measuring developer productivity recently hit the headlines in the global technology industry when McKinsey published an article [1] and world-renowned developers Gergely Orosz and Kent Beck responded [2].

Both articles agree that measuring individual developer productivity is difficult. The McKinsey article indicates that there is a growing need for developer productivity metrics. The Orosz and Beck paper highlights a number of flaws with the McKinsey approach.

Both papers indicate a chasm between executives with a legitimate need for a framework to measure developer productivity, and developers with a deep distrust of any attempt to measure productivity. The Adaptix Solutions CTO Advisory team seeks to find the middle way, satisfying the needs of executives in a way that respects developers and acknowledges the constraints that developers work within.

ADAPTIX specializes in providing high-quality technology teams in lower-cost locations. As such, we are strong advocates for developer productivity measures that enable our developers to shine and shift the conversation away from one that focuses only on the day rate.

The Adaptix Solutions CTO Advisory team is developing a CTO Dashboard that addresses the developer productivity challenge. It is the first in a series of CxO Dashboards that support conversations about progress and act as an “invitation to the Gemba for executives” [3], rather than simply encouraging decision-making.

This white paper addresses the following issues:

- Why executives need developer productivity metrics

- What makes a good metric

- A straw man for developer productivity metrics and the Adaptix Solutions CTO Dashboard

- Gaming the metric

- Problems with common metrics

- Next steps toward an industry standard developer productivity metric

Why do executives need developer productivity metrics?

Executives claim to need developer metrics for several reasons. Some are valid; others are less so. Metrics can help in some cases, and cause mayhem in others.

Here are some genuine reasons why executives need developer productivity metrics:

- Procurement. Without a developer productivity metric, the organization’s procurement partners can use only the metric they do have: cost, or the developer day rate. This leads to a race to the bottom, with suppliers offering increasingly less experienced and less skilled developers to reduce costs

- Improvement. Organizations spend a huge amount of money on development improvement programs, whether they are called “Cloud”, “Agile”, “Way of Working” or “DevOps”. Investors need a developer productivity metric to report that the investment delivered a decent return so that they can justify future investment in improvements

There are other reasons why executives say they need an engineer productivity metric or score, which typically result in mayhem.

Performance

Using a development performance score to determine the performance of an individual, with the associated rewards, bonuses and promotions, is damaging to the organization and prevents it from achieving its goals.

In 2013, Skype showed its data to the Chief People Officer at Microsoft, its parent company. Partly because of this, Microsoft scrapped stack ranking for all its employees. Individual rewards or recognition lead people to optimize for their own rewards, which blocks collaboration.

Individual performance measures also ignore the fact that much of an individual developer's productivity is a result of the team they are in, not their personal mental capacity, skills, or capabilities. Likewise, much of a team's productivity depends on the other teams it works with to deliver an investment, and much of an investment's productivity comes from the system in which it is developed.

Who to fire

Using a development performance metric to determine who to fire guarantees that the metric will be gamed hard and become useless. In the event of a downturn, consider the advice of Mark Gillett: simply introduce a hiring freeze, which will reduce headcount through natural attrition.

Other reasons

The McKinsey paper mentions four other reasons, none of which would benefit from an engineer productivity metric.

The first three are best handled by the team, and executives should simply support the teams.

The fourth, “How can we know if we have all the software engineering talent we need?”, is best handled with capacity planning, which helps product/business leadership understand which teams are constraining the organization’s ability to deliver value.

What makes a good metric?

Before we discuss a good developer productivity metric, we need to list several rules we should consider when choosing one:

- Rule 1. Good software developer performance metrics are objective; they are not subject to interpretation. Good metrics are consistently defined. This is why story points and velocity are so terrible: each team has its own definition of them

- Rule 2. Good metrics help those using them better understand and make sense of things. This means that simple, approximate metrics may be more useful than esoteric, precise metrics that are not understood. For example, “Multiply the output by the probability of it happening” is better than using Black-Scholes (What is Black-Scholes?... exactly the point)

- Rule 3. All metrics are gamed. So, rather than pick a metric, we first start by thinking of the behavior we want, then design the metric so that when it is gamed, we get the behavior we want. Lead Time or Cycle Time is a classic example of this. The easiest way to reduce Lead Time/Cycle Time is to build smaller investments. Making smaller investments is what we want the teams to do

- Rule 4. To be meaningful, developer productivity metrics need to exist in codependent groups: for example, Business Value, Lead Time, and Quality. Ignoring any of the three can lead to gaming. For example, it is easy to deliver high-quality code quickly if it delivers no value. Similarly, it is easy to produce high-quality code that delivers value while taking a long Lead Time
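Rule 4 can be illustrated with a minimal Python sketch. It assumes a hypothetical `Snapshot` holding the three co-dependent measures and treats a change as an improvement only when no measure in the group gets worse and at least one gets better. That acceptance rule is our illustrative assumption, not a formula taken from the rules above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """Hypothetical snapshot of the three co-dependent measures for one team."""
    business_value: float   # higher is better (declared by the business/product org)
    lead_time_days: float   # lower is better
    escaped_defects: int    # lower is better


def improved(before: Snapshot, after: Snapshot) -> bool:
    """Count a change as an improvement only if no measure in the group
    got worse and at least one got strictly better (assumed rule)."""
    no_regression = (
        after.business_value >= before.business_value
        and after.lead_time_days <= before.lead_time_days
        and after.escaped_defects <= before.escaped_defects
    )
    some_gain = (
        after.business_value > before.business_value
        or after.lead_time_days < before.lead_time_days
        or after.escaped_defects < before.escaped_defects
    )
    return no_regression and some_gain


baseline = Snapshot(business_value=100.0, lead_time_days=90.0, escaped_defects=4)
# Faster and cleaner, but only because nothing of value was shipped: rejected.
gamed = Snapshot(business_value=0.0, lead_time_days=30.0, escaped_defects=0)
# Faster with the same value and quality: accepted.
genuine = Snapshot(business_value=100.0, lead_time_days=60.0, escaped_defects=4)

print(improved(baseline, gamed))    # False
print(improved(baseline, genuine))  # True
```

Looking at any one measure alone, the `gamed` snapshot would appear to be the better result; evaluating the group together exposes it.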

A straw man for developer productivity metrics and the Adaptix Solutions CTO Dashboard

To demonstrate that it has improved, a development organization must deliver investments that represent business value, at sufficient quality, with a reduced Lead Time.

Clearly, the development organization cannot be held accountable for the business value delivered — that is the responsibility of the business/product organization. This means that profit, revenue, costs, customer satisfaction or market risk measures cannot be used to measure the effectiveness of the technology organization.

The developers can express their opinion about what they are asked to build, and express it quite loudly. But fundamentally, the business/product organization decides what is built and, as a result, the business outcome.

This leaves two developer metrics to measure productivity in software development: Quality and Lead Time.

Quality

Quality is measured by the number of escaped defects and their resulting impact. Quality is clearly a measure of the system rather than of an individual developer. Yes, a developer may have introduced a defect that ended up impacting a customer, but the rest of the system should have caught that defect.
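A minimal sketch of how the two parts of Quality might be computed. It assumes a hypothetical defect record that notes where each defect was caught and uses customers affected as a stand-in for impact; real impact measures will vary by organization.

```python
from typing import NamedTuple


class Defect(NamedTuple):
    """One defect record (hypothetical schema)."""
    found_in: str            # where it was caught: "test" or "production"
    customers_affected: int  # assumed proxy for customer impact


def quality(defects: list[Defect]) -> tuple[int, int]:
    """Return (number of escaped defects, total customers affected by them).

    A defect 'escapes' if it was only caught in production, i.e. every
    earlier stage of the delivery system missed it. Lower is better for both.
    """
    escaped = [d for d in defects if d.found_in == "production"]
    return len(escaped), sum(d.customers_affected for d in escaped)


defects = [
    Defect("test", 0),
    Defect("production", 120),
    Defect("production", 3),
    Defect("test", 0),
]
print(quality(defects))  # (2, 123)
```

Nothing in the record identifies who wrote the defect; the measure is about what the system as a whole let through.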

Lead Time

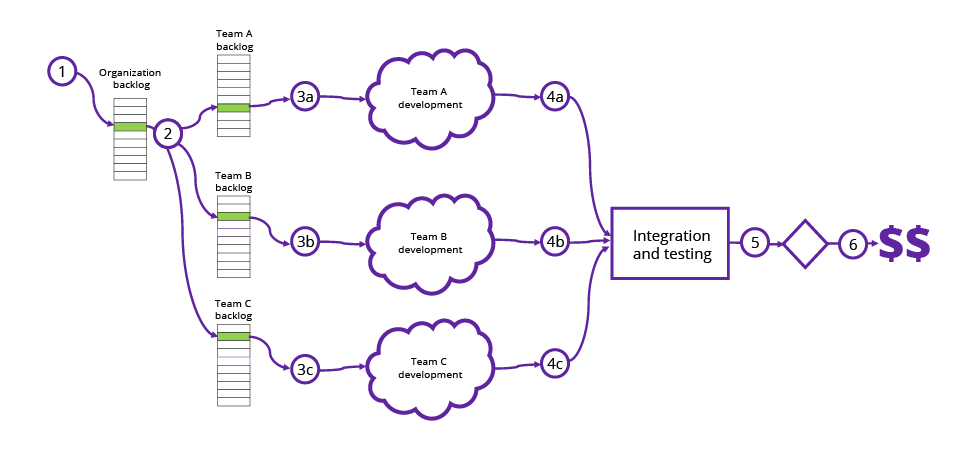

Lead Time is a measure of how long it takes a development organization to deliver an investment. But where do we start and stop the clock? Consider the points in time, numbered (1) to (6), shown in the diagram above.

Lead Time could be measured from (1) to (6), or Concept to Cash. The problem is that this violates Rule 3: the easiest way to game the metric is to hide work in a shadow backlog until it is started. As a result, we lose a clear view of the future backlog, which makes capacity planning impossible.

For this reason, we choose (3) to (6), from the point when any team starts working until the investment delivers value. The business/product organization should determine when the technology organization has delivered something of value (6), or simply a fragment of what is needed (5). In effect, the business/product organization acts to stop the clock on an investment, declaring that it has delivered value.

This gives us the Lead Time for a single investment. However, we cannot compare it to other investments to see if things have improved or not.
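A minimal sketch of the single-investment Lead Time from point (3) to point (6). It assumes hypothetical team start dates and a value date declared by the business sponsor; the team names and dates are illustrative only.

```python
from datetime import date


def lead_time_days(first_team_start: date, value_declared: date) -> int:
    """Lead Time from point (3), when any team starts work, to point (6),
    when the business/product organization declares that value was delivered."""
    if value_declared < first_team_start:
        raise ValueError("value cannot be declared before work starts")
    return (value_declared - first_team_start).days


# Hypothetical investment: the date each contributing team started.
team_starts = {
    "payments": date(2024, 1, 8),
    "mobile": date(2024, 2, 5),
    "platform": date(2024, 1, 15),
}
value_declared = date(2024, 5, 6)  # clock stopped by the business sponsor

# The clock starts when the *first* team starts, not when the idea was conceived.
start = min(team_starts.values())
print(lead_time_days(start, value_declared))  # 119
```

The single number says nothing about how much money was tied up along the way, which is why it cannot be compared across investments of different sizes.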

Fortunately, we can use Weighted Lead Time, which is based on the concept of duration in bond mathematics, to compare and aggregate investments. At this point, we invoke Rule 2 and state that this is too complicated for a discussion between technical and non-technical executives.

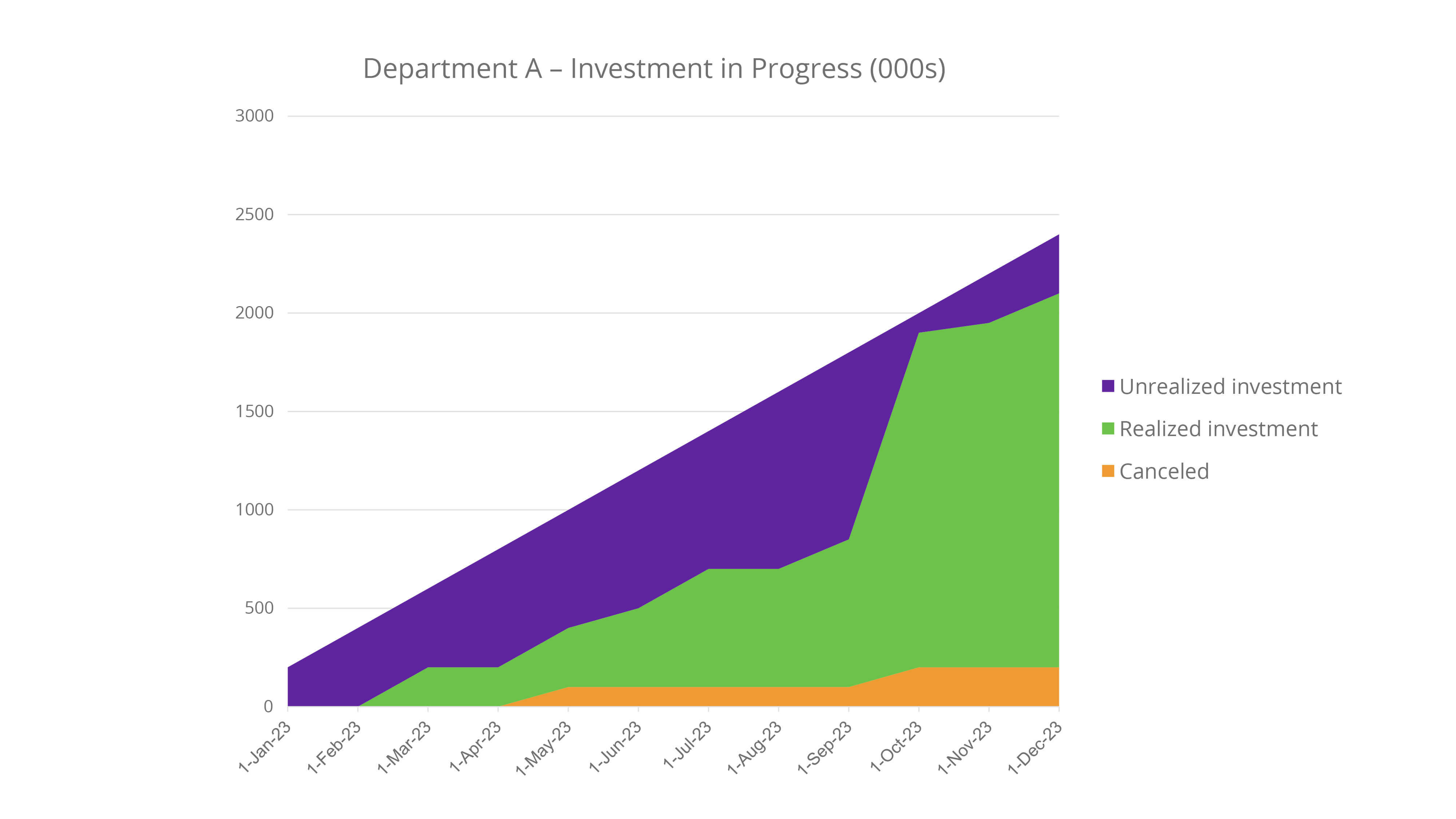
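For technical readers, the bond analogy can be made concrete. In bond mathematics, Macaulay duration is the present-value-weighted average time until a bond's cash flows are received. One possible reading of Weighted Lead Time, which is our assumption rather than a formula stated in this paper, replaces cash flows with the cost of each team's contribution and measures time from when that spend starts until the business declares value:

$$
D = \frac{\sum_i t_i \, PV_i}{\sum_i PV_i}
\qquad\qquad
\mathrm{WLT} = \frac{\sum_i c_i \,(T_v - s_i)}{\sum_i c_i}
$$

Here $t_i$ is the time until the $i$-th cash flow and $PV_i$ its present value; $c_i$ is the cost of the $i$-th contribution, $s_i$ when it started, and $T_v$ when value was declared. For example, a team costing 300,000 that starts 120 days before value is declared and another costing 100,000 that starts 40 days before give $\mathrm{WLT} = (300{,}000 \times 120 + 100{,}000 \times 40) / 400{,}000 = 100$ days, compared with a plain Lead Time of 120 days.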

Investment in Progress

Another way of looking at Lead Time is to consider Investment in Progress: the amount of money invested in something that has not yet delivered value. The longer an investment takes to build, the more money is tied up in it. For a given rate of spending, Investment in Progress is proportional to Lead Time.
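A minimal sketch of the proportionality between Investment in Progress and Lead Time, assuming a hypothetical constant monthly spend. With uneven spending the relationship is only approximate.

```python
def investment_in_progress(monthly_spend: list[int], months_without_value: int) -> int:
    """Money spent on an investment that has not yet delivered value.

    monthly_spend[m] is the (hypothetical) spend in month m of the Lead Time;
    months_without_value is how many months have passed without the business
    declaring that value was delivered.
    """
    return sum(monthly_spend[:months_without_value])


BURN = 50_000  # assumed constant monthly cost of the teams on the investment
spend = [BURN] * 12

for months in (3, 6, 12):
    print(months, investment_in_progress(spend, months))
# 3 150000
# 6 300000
# 12 600000
```

Doubling the Lead Time doubles the money at risk, which is the argument for treating the two as interchangeable views of the same thing.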

Investment in Progress can be used to look at the effectiveness of the entire technology investment portfolio:

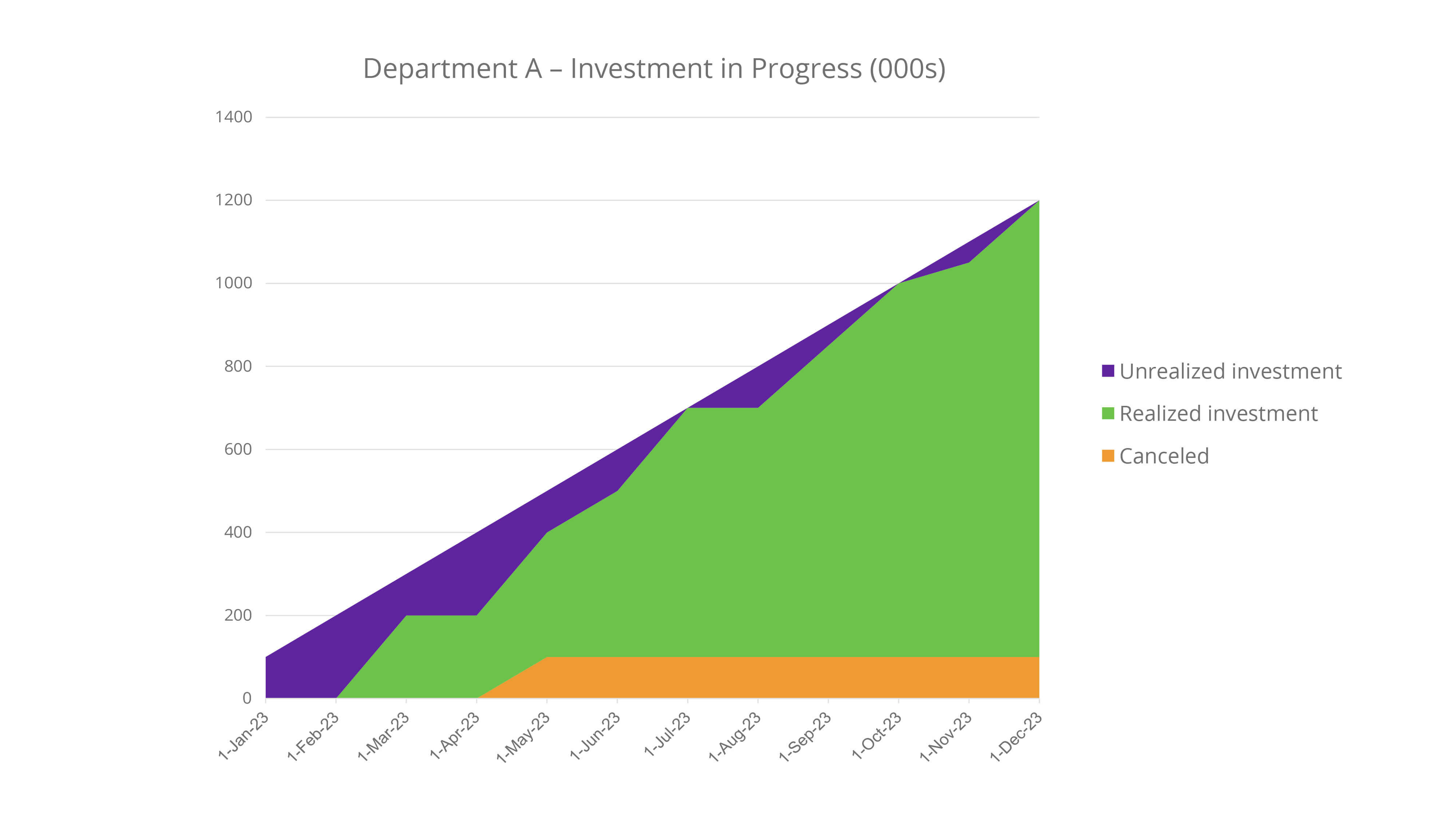

We can drill down to look at each investment, department, team or product. It allows us to understand how they are performing in terms of taking investment dollars and converting them into business value for the enterprise.

Some will show effective investments that deliver value faster, while others will show where investments have been less effective.
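A minimal sketch of that drill-down, assuming a hypothetical `Stake` record for each team's unconverted spend on each investment. The investments, departments, and figures are invented for illustration.

```python
from collections import defaultdict
from typing import NamedTuple


class Stake(NamedTuple):
    """One team's share of one investment (hypothetical schema)."""
    investment: str
    department: str
    team: str
    iip: int  # spend not yet converted into business value


def drill_down(stakes: list[Stake], by: str) -> dict[str, int]:
    """Total Investment in Progress grouped by 'investment', 'department' or 'team'."""
    if by not in ("investment", "department", "team"):
        raise ValueError(f"cannot group by {by!r}")
    totals = defaultdict(int)
    for stake in stakes:
        totals[getattr(stake, by)] += stake.iip
    return dict(totals)


portfolio = [
    Stake("new-checkout", "Retail", "payments", 420_000),
    Stake("new-checkout", "Retail", "mobile", 180_000),
    Stake("risk-engine", "Markets", "platform", 650_000),
    Stake("loyalty-app", "Retail", "mobile", 90_000),
]
print(drill_down(portfolio, "department"))  # {'Retail': 690000, 'Markets': 650000}
print(drill_down(portfolio, "team"))        # {'payments': 420000, 'mobile': 270000, 'platform': 650000}
```

The same records support every level of the drill-down; only the grouping key changes.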

The business/product organization can discuss the effectiveness of technology delivery using Investment in Progress. As a metric, it encourages teams to work together to deliver value faster, which is a desirable behavior.

Although we can use Investment in Progress as a team productivity metric, we find that Weighted Lead Time is more actionable. Here we invoke the Einstein Rule: “Everything should be as simple as possible, but not simpler.” Weighted Lead Time is the average time from when a team starts on an investment until it delivers value, weighted by investment cost.
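A minimal sketch of that definition for a single investment, assuming each team's contribution can be summarized as a hypothetical cost and start date. The function name `weighted_lead_time` and the sample figures are illustrative.

```python
from datetime import date


def weighted_lead_time(contributions: list[tuple[int, date]], value_date: date) -> float:
    """Cost-weighted average number of days from when each team started on the
    investment until the business declared value delivered.

    contributions: (cost, start_date) per team, hypothetical inputs.
    """
    total_cost = sum(cost for cost, _ in contributions)
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    weighted_days = sum(cost * (value_date - start).days for cost, start in contributions)
    return weighted_days / total_cost


contributions = [
    (300_000, date(2024, 1, 1)),   # team that started early and spent most (120 days)
    (100_000, date(2024, 3, 21)),  # team that joined later (40 days)
]
print(weighted_lead_time(contributions, date(2024, 4, 30)))  # 100.0
```

Because the weights are costs, the teams that tie up the most money for the longest dominate the result, which is where shortening the wait pays off most.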

4 tools to measure developer productivity

Our recommended software development productivity measurement methods for a team are:

- The Weighted Lead Time for the team within an investment, i.e., the average time from when the team starts until the investment delivers value

- The Weighted Lead Time of all investments that the team contributes to, i.e., the average time from when each contributing team starts until the investment delivers value

Whilst the first indicator measures the team's effectiveness, it needs to be balanced with the team's ability to collaborate and support other teams involved in the investment.
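A minimal sketch of the two team-level indicators, assuming hypothetical `Contribution` and `Investment` records. Indicator (2) pools every team's contributions on the investments the team touches, which is equivalent to weighting each investment's Weighted Lead Time by its total cost; that weighting choice is our assumption.

```python
from datetime import date
from typing import NamedTuple


class Contribution(NamedTuple):
    team: str
    cost: int    # hypothetical cost of this team's work on the investment
    start: date  # when this team started on the investment


class Investment(NamedTuple):
    name: str
    value_date: date  # when the business/product organization stopped the clock
    contributions: list[Contribution]


def _cost_weighted_days(items: list[tuple[int, int]]) -> float:
    """Cost-weighted average of (cost, days) pairs."""
    total_cost = sum(cost for cost, _ in items)
    return sum(cost * days for cost, days in items) / total_cost


def team_indicators(team: str, investments: list[Investment]) -> tuple[float, float]:
    """Return (1) the team's own Weighted Lead Time and (2) the Weighted Lead
    Time of every team on the investments this team contributes to."""
    own: list[tuple[int, int]] = []
    everyone: list[tuple[int, int]] = []
    for inv in investments:
        if all(c.team != team for c in inv.contributions):
            continue  # the team has no stake in this investment
        for c in inv.contributions:
            entry = (c.cost, (inv.value_date - c.start).days)
            everyone.append(entry)
            if c.team == team:
                own.append(entry)
    if not own:
        raise ValueError(f"{team!r} contributes to none of these investments")
    return _cost_weighted_days(own), _cost_weighted_days(everyone)


investments = [
    Investment("checkout", date(2024, 4, 30), [
        Contribution("payments", 300_000, date(2024, 1, 1)),  # 120 days
        Contribution("mobile", 100_000, date(2024, 3, 21)),   # 40 days
    ]),
    Investment("loyalty", date(2024, 6, 30), [
        Contribution("mobile", 100_000, date(2024, 5, 1)),    # 60 days
    ]),
]
print(team_indicators("mobile", investments))    # (50.0, 92.0)
print(team_indicators("payments", investments))  # (120.0, 100.0)
```

In this invented example the mobile team is quick on its own work (50 days), but the investments it contributes to take 92 days end to end, which points to where helping another team would pay off.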

These two Lead Time indicators should be combined with Quality metrics:

- Number of escaped bugs

- Number of production incidents

For all four metrics, lower is better.

The goal for each team would be to show that they have reduced each metric down to a target level set by the product organization or business sponsor. They then need to hold it at that level or continue to improve if they choose to.
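A minimal sketch of that target check, assuming hypothetical metric names, targets, and current values for one team. All four metrics are treated as lower-is-better.

```python
def scorecard(actuals: dict[str, float], targets: dict[str, float]) -> dict[str, str]:
    """Compare lower-is-better metrics against targets set by the product
    organization or business sponsor."""
    report = {}
    for name, target in targets.items():
        gap = actuals[name] - target
        report[name] = "on target" if gap <= 0 else f"{gap:g} above target"
    return report


# Hypothetical targets and current values for one team.
targets = {
    "team_weighted_lead_time_days": 60,
    "investment_weighted_lead_time_days": 90,
    "escaped_bugs": 5,
    "production_incidents": 2,
}
actuals = {
    "team_weighted_lead_time_days": 50.0,
    "investment_weighted_lead_time_days": 92.0,
    "escaped_bugs": 3,
    "production_incidents": 2,
}
for metric, status in scorecard(actuals, targets).items():
    print(f"{metric}: {status}")
```

Holding a metric at its target counts as success; the report does not reward pushing one metric further at the expense of the others.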

What about the productivity metric of a single developer? That would be the same as the developer productivity metric of the team.

The problem comes when you want to assign a metric to an individual in the team. It impacts the way that the team works, forcing them to work in a particular individualistic and non-collaborative manner.

Investment in Progress and Weighted Lead Time acknowledge a truth about developer performance: higher-performing developers deliver value sooner and more safely [4].

Gaming the metrics

When we assess developers using metrics, we should consider how people will game them. Gaming a metric means that people follow the letter of the law but do so in a way that minimizes their effort.

Building smaller investments

The easiest way to game the Weighted Lead Time is to build smaller investments. Instead of building one big investment, we make several smaller investments that build up to the “same” thing. This is a desirable behavior.

Creating smaller investments requires collaboration between the product organization or business sponsors, who know what delivers value, and the technology organization, which knows what is required to deliver an investment.
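A minimal sketch of why splitting work reduces Weighted Lead Time. It assumes a hypothetical four-month piece of work with equal monthly spend, and that each smaller slice is independently valuable and is declared so by the business when it finishes.

```python
def weighted_lead_time(tranches: list[tuple[int, int]], value_month: int) -> float:
    """Cost-weighted average months from each tranche of spend until value is declared.

    tranches: (cost, month spent) pairs, hypothetical inputs.
    """
    total_cost = sum(cost for cost, _ in tranches)
    return sum(cost * (value_month - month) for cost, month in tranches) / total_cost


# One large investment: work in months 0-3, value declared only at month 4.
big = weighted_lead_time([(100, 0), (100, 1), (100, 2), (100, 3)], value_month=4)

# The "same" scope as four smaller investments, each declared valuable when it finishes.
smalls = [weighted_lead_time([(100, m)], value_month=m + 1) for m in range(4)]
# All four have equal cost, so the plain mean equals the cost-weighted mean.
portfolio_small = sum(smalls) / len(smalls)

print(big, portfolio_small)  # 2.5 1.0
```

The total spend is identical in both cases; only the time that money waits before turning into value changes.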

Delivering partial solutions with no business value

Getting a senior business sponsor to declare that business value has been fully delivered when only part of it has been is not gaming. It is fraud: it breaks the rules and should be managed by the governance system to ensure that it does not occur. If it does occur, the organization should take appropriate disciplinary action.

Cheating by helping other teams

Teams that realize that the end-to-end delivery of business value is being held up by another team’s ability to deliver will help that team. A team with good DORA metrics might help a sister team improve its DORA metrics so that the other team can deliver sooner. This is a desirable behavior.

Working closely with other teams

Rather than wait for another team to finish before a team starts, the teams collaborate closely together, working in parallel with regular touchpoints. Issues and bugs are addressed in near-real time. This is a desirable behavior.

Helping teammates to improve

Rather than depend on one person to perform one task on the team with the resulting blockages, the team members cross-train each other. As a result, several people on the team can perform any task. This is a desirable behavior.

Pushing for focused action

Rather than work on many investments at a time, the teams push for organization-wide ordering of investments. The business sponsors are forced to come together to agree on the order in which investments will be delivered. This is a desirable behavior.

Problems with common developer productivity metrics

This section looks at the problems associated with the commonly used performance metrics for software developers.

Business Value metrics (outcome and impact)

Business Value metrics determine the ultimate success or failure of an investment. They can be measured once the technology organization has delivered the investment.

An investment may be intended to increase profit, revenue, sales, customer satisfaction, or the number of customers, or to reduce costs, operational risk, or market risk. Whether it fulfills its purpose can be tracked by plotting the target metric over time with the investment superimposed, showing when the investment should improve that metric.

The Adaptix Solutions CEO Dashboard below shows this.

Business Value metrics should not be used to determine developer productivity as developers do not determine what they should build and for whom. The product organization or business sponsors do. They determine when something of value has been delivered, and they stop the clock for Lead Time.

Effort and Output

The McKinsey paper suggests Effort and Output as developer productivity metrics. The Orosz and Beck articles provide a clear argument for why Effort (inputs) and Output are not good measures of productivity in software engineering.

Effort is a common basis for measuring developer productivity. It can be measured as hours worked, story point throughput, story throughput, or some measure of “function points”.

Story points and story counts are a particularly poor measure because the development teams define them, which makes them easy to game. Beyond that, they cannot be standardized across teams.

Effort- and Output-based metrics such as the DORA metrics are incredibly valuable as tools to diagnose systems of delivery and help identify how productivity can be improved. They are not valid developer productivity metrics, though.

SpotiFAANG recruitment process

Developer productivity in an enterprise with highly productive developers is not an issue. When every developer has been through a rigorous set of interviews and tests by some of the top developers in the industry, you can guarantee you have high-performing developers.

In the high-performance end of the industry, it is common for an organization to take fewer than one out of every one hundred high-performing applicants.

Other enterprises, in industries that are less high-performing and less dominated by innovation, also need to measure performance and decide what developer productivity means to them.

They need a metric that does not differentiate the top 1% as SpotiFAANG recruitment does. Instead, their chosen metric should help the enterprise understand whether a developer's performance is in the top 50% or top 80% so that they can pay accordingly.

Next steps toward an industry standard developer productivity metric

Adopting Weighted Lead Time and Investment in Progress to measure and communicate developer productivity is our recommendation to our clients.

However, that is our opinion, and it may be at odds with the views of our competitors in the high-developer-productivity sector of the technology consultancy space.

To that end, we intend to invite our competitors and some industry experts to join us in a series of workshops to develop a better measure of developer productivity than simply using “1 / day rate”.

Would you like to join us?

In conclusion

Executives need developer productivity metrics to support strategic decision-making. We thank McKinsey for bringing this issue to the attention of the developer community.

We thank Gergely and Kent for making everyone aware of the complexities associated with software development productivity measurement, highlighting many of the pitfalls.

ADAPTIX CTO Advisory recommends the use of Investment in Progress / Weighted Lead Time and Escaped Defects, all of which will be available in the CTO Dashboard.

We recognize the need for an industry standard. More importantly, we recognize the need for advice on where and when such metrics should be used and for what purposes, as using such important metrics in the wrong context can cause damage to an organization.

Please contact your Adaptix Solutions account manager if you would like to discuss any of the above in more detail.

References:

[2] https://newsletter.pragmaticengineer.com/p/measuring-developer-productivity

[3] https://blog.jabebloom.com/2012/02/10/the-management-interaction-gap

[4] “Sooner Safer Happier: Antipatterns and Patterns for Business Agility” Jonathan Smart et al.