15 = 3×5: primary school for scientists or a near-term technological disruption?

Sep 6, 2022 by Dr. Ulrich Wurstbauer

Does this calculation ring a bell? Then there’s a good chance you either have children in elementary school or are aware of an important milestone on the way to quantum computing: the first experimental prime factorization based on Shor’s algorithm, carried out on qubits about two decades ago [1].
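To make the 15 = 3 × 5 example concrete, here is a minimal sketch of the classical number theory that Shor’s algorithm relies on. The period (order) is found by brute force here, which is exactly the step a quantum computer is meant to speed up. The function names and the base a = 7 are illustrative assumptions and are not taken from the experiment cited above.

```python
from math import gcd


def find_order(a: int, n: int) -> int:
    """Return the smallest r > 0 with a**r % n == 1.

    Brute force stand-in for the quantum period-finding step.
    """
    if gcd(a, n) != 1:
        raise ValueError("a and n must be coprime")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(a: int, n: int):
    """Try to split n using the order of a; return (p, q) or None if a is unlucky."""
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # an odd order gives no usable square root of 1
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root, pick another a
    p = gcd(half - 1, n)
    if p in (1, n):
        return None
    return tuple(sorted((p, n // p)))


print(find_order(7, 15))         # order of 7 modulo 15
print(factor_from_order(7, 15))  # factors recovered from that order
```

With a = 7 the powers modulo 15 run 7, 4, 13, 1, so the order is 4, and the greatest common divisor of 7² − 1 = 48 and 15 yields the factor 3.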

New technologies usually need to mature significantly before they leave academic research and enter business-related fields. The same applies to quantum computing, so when is a good time to start looking at this emerging technology from a business perspective?

Strong evidence that the maturity of quantum computers has changed is that the companies building them publish hardware roadmaps and stick to them [2]. It is truly impressive that hardware companies are delivering the milestones on these roadmaps and expect to reach hundreds to thousands of qubits within the next one to three years [3]. Very recent reports also show that hardware architectures such as those behind IBM’s Osprey and Condor quantum systems enable scaling of the semiconductor production processes, a key ingredient for solving ever more complex tasks, as we know from classical computing and programming.

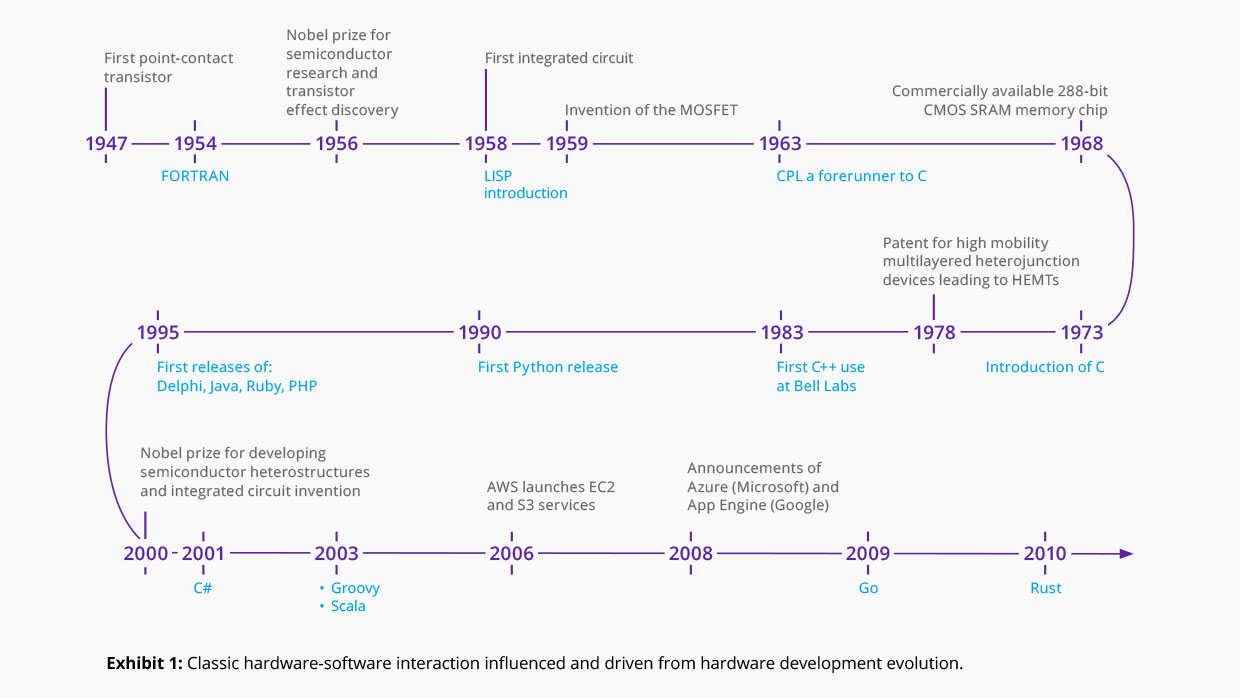

Over the course of decades, hardware roadmaps have been a major driver of the software landscape, as seen in Exhibit 1: classic hardware-software interaction, influenced and driven by the evolution of hardware development.

So, is quantum computing at an early stage comparable to that of the first microchips? Formally you can think of it that way, but like everything else in quantum computing, it is not as simple as counting qubits.

Why?

First of all, there are, and will continue to be, various technical realizations of qubits. Each approach has its strengths and weaknesses when it comes to decoherence time, robustness of the states (which influences error rates and their correction) and scalability, to name a few.

Second, many different companies, from big tech down to startups, are looking to improve the current electrical engineering approaches while also exploring new classes of artificial materials with enormous potential for new computing options.

And third, the largest share of business computing is currently moving to cloud-based systems, and that share is still growing. Combining specialized machines such as quantum computers with the existing infrastructure is therefore the obvious path to follow. Indeed, you can already spin up corresponding services on your cloud provider of choice.

But does this solve all problems? Of course not; quite the opposite. The journey is just getting started, because we still need to build up the knowledge and experience to use this new computing hardware properly and learn its pros and cons in real application programming.

But hold on, which potential applications are feasible? One of the most prominent, if not the most prominent, is paving the way to quantum cryptography and security. It builds on the 15 = 3 x 5 calculation and deals with the prime factorization of large numbers. The difficulty of factoring such numbers underpins many of our current encryption mechanisms. And yes, that has a huge impact: think of banking and capital markets as well as your own privacy. It also affects all of our mobility needs, since vehicle makers must ensure that only approved and safe software gets deployed in their vehicles (which in the future will be connected, autonomous and software-defined).
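Why factoring matters for encryption can be shown with a toy sketch of textbook RSA, again using the deliberately tiny modulus 15. All values and function names below are illustrative assumptions; real keys use moduli thousands of bits long plus padding schemes, and trial division would never finish on them.

```python
from math import isqrt


def trial_factor(n: int):
    """Factor a small semiprime by trial division (hopeless for real key sizes)."""
    for p in range(2, isqrt(n) + 1):
        if n % p == 0:
            return p, n // p
    raise ValueError("no non-trivial factor found")


def private_exponent(e: int, p: int, q: int) -> int:
    """Derive the RSA private exponent once the prime factors are known."""
    phi = (p - 1) * (q - 1)
    return pow(e, -1, phi)  # modular inverse, needs Python 3.8+


# Toy public key: modulus n and public exponent e (illustrative values only).
n, e = 15, 3
message = 7
cipher = pow(message, e, n)  # anyone can encrypt with the public key

# An attacker who can factor n recovers the private key and the message.
p, q = trial_factor(n)
d = private_exponent(e, p, q)
recovered = pow(cipher, d, n)

print(cipher, d, recovered)
```

The only secret protecting the message is the factorization of n, which is why an efficient factoring method puts this kind of scheme at risk.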

Quantum computers are already being used in R&D for new materials. This is especially the case in the e-mobility domain, where much effort goes into developing better and longer-lasting batteries. As a side note, the same chemistry-focused usage applies to drug research and other materials research.

Another typical problem in the automotive industry is route optimization at scale. Current production approaches can only solve the problem from an ego perspective, that is, without accounting for the interactions with other traffic participants. With quantum computing, you can take a bird’s-eye perspective and optimize the overall traffic flow (meaning each individual’s navigation route) depending on everyone else’s needs. Think about it in your next traffic jam: how beneficial would it be if we optimized travel time across all the existing road network options in your metropolitan area?

And then there’s the large area of data analytics and quantum machine learning. Autonomous vehicle (AV) experts should at least start digging into quantum-based high-performance computing options, if they have not done so already. The AV development landscape is evolving very fast, and potential use cases are emerging around scenario generation at scale, including the identification of corner cases in simulations. This would enable later in-depth analysis using classical high-performance computing. In addition, quantum machine learning shows high potential to speed up learning processes [4], which would allow faster understanding of large amounts of data, a very typical, if not the most characteristic, challenge in autonomous driving development. In this regard, Adaptix and DXC bring in-depth technical expertise to enable our clients to perform end-to-end development at scale.

So, building up the right expertise in quantum computing is of high value for meeting future development needs, and it will require us to spend sufficient time on this new computing option, which is already here. We just need to get started and get used to the quaint behaviors of the quantum world.

Sources: